Are AI Browsers Safe for the Enterprise?

Executive Briefing

TL;DR

AI-native "agentic" browsers can take multi-step actions on a user’s behalf, which changes the risk profile of web browsing. In December 2025, Gartner advised most organizations to block AI browsers for now while enterprise controls catch up. Prompt injection is no longer just about manipulating text. It can influence real actions taken inside authenticated browser sessions. The right path forward is a controlled pilot, clear acceptable-use rules, and upgraded monitoring/incident response before broader adoption.

AI Browsers are Inevitable

AI browser extensions have been available for a while. The launch of AI-native browsers with agentic capabilities is different: these browsers can take multi-step actions on a user's behalf.

In December 2025, Gartner published an advisory recommending that most organizations block AI browsers for now, at least until controls and governance capabilities catch up. [1]

Historically, prompt injection attacks influenced model outputs (the resulting text). With agentic AI browsers, prompt injection can influence real actions because the agent is operating inside the same browser session and with the effective permissions of the user.

Shadow AI adds pressure. A recent survey reported that employees will use AI tools that violate policy if doing so helps them finish work faster. [2]

Security Analysis

Risks

AI browsers introduce risks that aren’t fully addressed by the assumptions and controls most enterprises rely on today. Here are the major ones:

Visibility loss - Many AI browsers do not yet offer the same centralized audit logging, SIEM feeds, data-retention alignment, and enterprise reporting that security teams expect from mature browsers. For example, OpenAI's Atlas is explicitly out of scope for SOC 2/ISO attestations, does not emit compliance API logs, and does not integrate with SIEM or eDiscovery today. Some Atlas-specific data types may also not be covered by Enterprise retention/deletion requirements, and Atlas-specific data is not region-pinned. [3]

Broken isolation assumptions - Modern browsers enforce security boundaries like same-origin policy and tab isolation. Agentic AI browsers weaken practical isolation assumptions because the agent can read content and perform actions across tabs and contexts.

Practical example: I asked an AI browser (Atlas) to submit a form in one tab using a summary of content from another tab, and it completed the task.

Lack of least privilege - Agentic AI browsers typically operate with the user's effective privileges in the browser session (cookies, active logins, and whatever the user can access). That makes high-value users (executives, finance, IT, and executive assistants) especially attractive targets for prompt-injection and social engineering. Gartner's warning highlights risks including unsafe autonomous actions and indirect prompt injection.

Data commingling - When browser agents can "see" more context (tabs, history, page content, connected services), you increase the odds that personal and professional data, or low-risk and high-risk workflows, get mixed in ways your policies don't cover.

Controls (and their limits)

AI browser vendors are adding controls, but executives should assume those controls are nascent and evolving.

Prompt injection defenses

Defenses are improving, and the industry is getting better at labeling prompt injection behavior through public benchmarks and datasets. However, this is an active adversarial space: attack techniques will improve alongside the defenses.

Human in the loop

Most agentic AI browsers provide opportunities for human oversight.

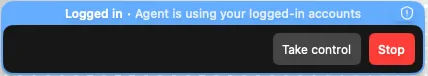

In Atlas, agent mode displays an initial, first-run warning. It also provides the ability to pause/interrupt/take over, and a "logged out" mode where the agent won’t use existing cookies or accounts unless you explicitly approve. Atlas also documents key boundaries for agent mode (e.g., it can’t access saved passwords or autofill data, and it can’t install extensions). [4]

Agent mode with "take control" option

This control only works when users actually pay attention, especially during repetitive workflows where people are most likely to go "hands-off".

Central management

Enterprise browser management (policy, logging, reporting connectors) is a core part of endpoint protection. AI browser management is lagging behind.

OpenAI’s Atlas supports limited MDM-managed preferences, but OpenAI states that Atlas is early access and not yet in scope for key enterprise controls (Compliance API logs, SIEM/eDiscovery feeds, region pinning, and Atlas-specific SSO enforcement/SCIM, among others).

Perplexity positions Comet as enterprise-ready, citing SOC 2 Type II plus GDPR/HIPAA claims, and states that browsing data and AI interactions are stored locally with end-to-end encryption (with settings to delete stored browsing data, saved passwords, sign-in data, and payment information). [5] [6] It also offers Perplexity Enterprise audit logs delivered via webhook. [7]

Chrome Enterprise remains the benchmark for mature centralized controls, including reporting connectors that can send browser security events to third-party providers. [8]

Here's a high-level comparison (based on public documentation as of Dec 2025):

| Feature (core enterprise controls) | Atlas | Comet | Chrome Enterprise |
|---|---|---|---|
| Centralized policy mgmt. / MDM support | ⚠️ Limited (managed preferences via MDM; broader coverage still limited) | ⚠️ MDM deployment; org policy depth varies by plan/docs | ✅ Full policy mgmt. via admin console |
| Disable agent / AI mode | ⚠️ Org-level controls exist, but Atlas-specific governance is limited / early access | ❌ Not clearly documented as an org-wide "off switch" | ✅ No agent mode (not applicable) |
| Extension allow / block lists | ⚠️ Supported via MDM keys | ❌ Not clearly documented | ✅ Mature extension governance |
| Browser reporting / logging | ❌ No Compliance API logs, SIEM, or eDiscovery feeds | ⚠️ Audit logs via webhook for Enterprise (scope may not equal "browser security event" feeds) | ✅ Reporting connectors to third-party providers |
| Data controls (retention, deletion, residency) | ⚠️ User-level clearing exists, but some Atlas data types aren’t covered by Enterprise retention/deletion; not region-pinned; not recommended for regulated, confidential, or production data | ✅ Local E2E storage and user deletion controls; enterprise retention options (plan-dependent) | ✅ Mature admin policy controls and enterprise-grade reporting connectors |
| SOC 2 / ISO 27001 | ❌ Not currently in scope for SOC 2 / ISO attestations | ✅ SOC 2 Type II + GDPR/HIPAA claims (per vendor docs) | ✅ Covered by Google’s enterprise certification programs (program-level) |

Legend: ✅ Available ⚠️ Limited/partial ❌ Not available

If your organization currently depends on enterprise browser security controls for governance and incident response, delay broad adoption until you can implement the controls needed to meet customer, regulatory, and risk requirements.
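One way to make that decision concrete is to encode the comparison table as data and check it against your own required controls. The sketch below is illustrative only: the control keys (`policy_mgmt`, `siem_logging`, and so on) and the `REQUIRED_CONTROLS` set are hypothetical names, and the support levels are a rough transcription of the table above, not vendor-confirmed values.

```python
# Hypothetical required controls; replace with your organization's own list.
REQUIRED_CONTROLS = {
    "policy_mgmt",
    "agent_off_switch",
    "extension_governance",
    "siem_logging",
}

# Support levels transcribed from the comparison table above:
# "full" = available, "partial" = limited, "none" = not available.
BROWSER_SUPPORT = {
    "Atlas": {
        "policy_mgmt": "partial",
        "agent_off_switch": "partial",
        "extension_governance": "partial",
        "siem_logging": "none",
    },
    "Comet": {
        "policy_mgmt": "partial",
        "agent_off_switch": "none",
        "extension_governance": "none",
        "siem_logging": "partial",
    },
    "Chrome Enterprise": {
        "policy_mgmt": "full",
        "agent_off_switch": "full",
        "extension_governance": "full",
        "siem_logging": "full",
    },
}


def find_gaps(browser, required=REQUIRED_CONTROLS):
    """Return required controls the browser does not fully support."""
    support = BROWSER_SUPPORT[browser]
    return {
        control: support.get(control, "none")
        for control in sorted(required)
        if support.get(control, "none") != "full"
    }


if __name__ == "__main__":
    for name in BROWSER_SUPPORT:
        print(name, find_gaps(name))
```

Any control that comes back as "partial" or "none" is a candidate for a compensating control or a reason to keep the browser out of scope for now.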

Next Steps

The priority is to create a plan for safe adoption. Assume that AI browsers are here to stay, and assume that some users will try them, with or without approval.

Here is a list of next steps to help you get ahead, or stay ahead:

Pilot: Identify a small pilot group that balances business value with risk. Choose workflows heavy on research/docs/internal communications and light on regulated or highly sensitive data (avoid exec assistants, finance ops, M&A, HR casework).

Gap analysis: Compare your required browser controls (policy, logging, DLP, audit, identity) against what the AI browser actually supports today.

Shadow-AI discovery: Use proxy/DNS/SWG (secure web gateway) logs and EDR telemetry to identify AI browser user-agents and destinations.

Classify findings as "block now", "monitor" and "pilot candidates".

Send a short, targeted message: acknowledge productivity intent, explain risk plainly, and offer a path into the official pilot.
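As a starting point for the discovery step, a short script can scan exported proxy or SWG logs for AI browser indicators. This is a minimal sketch under several assumptions: the log is a CSV export with `timestamp,user,host,user_agent` columns, and the user-agent marker and host suffix shown are placeholders, not real AI browser signatures. Substitute indicators you have confirmed from vendor documentation or your own traffic captures.

```python
import csv
import io
from collections import Counter

# Placeholder indicators (assumptions, not real signatures).
AI_BROWSER_UA_MARKERS = ("exampleaibrowser",)
AI_BROWSER_HOST_SUFFIXES = (".example-ai-browser.test",)


def find_ai_browser_users(log_text):
    """Count matching requests per user in a CSV log export.

    Expects columns: timestamp, user, host, user_agent.
    """
    hits = Counter()
    for row in csv.DictReader(io.StringIO(log_text)):
        user_agent = row["user_agent"].lower()
        host = row["host"].lower()
        ua_match = any(marker in user_agent for marker in AI_BROWSER_UA_MARKERS)
        host_match = host.endswith(AI_BROWSER_HOST_SUFFIXES)
        if ua_match or host_match:
            hits[row["user"]] += 1
    return hits


SAMPLE_LOG = """timestamp,user,host,user_agent
2025-12-10T09:00:00Z,alice,api.example-ai-browser.test,Mozilla/5.0 ExampleAIBrowser/1.0
2025-12-10T09:00:05Z,bob,intranet.example.com,Mozilla/5.0 Chrome/143.0
2025-12-10T09:01:00Z,alice,docs.example.com,Mozilla/5.0 ExampleAIBrowser/1.0
"""

if __name__ == "__main__":
    for user, count in find_ai_browser_users(SAMPLE_LOG).most_common():
        print(user, count)
```

The per-user counts give you a first list to sort into "block now", "monitor", and "pilot candidates".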

Phased approach: Define adoption phases and the controls required for each:

Phase 0 - Blocked: Block AI browsers/extensions at network and endpoint levels.

Phase 1 - Controlled pilot: Small allowlist with clear business justification and increased monitoring.

Phase 2 - Limited adoption: Expand only for teams that meet data profile and training criteria.

Phase 3 - Broad adoption: Allowed with policy, monitoring, and periodic reviews.

Publish AI browser acceptable-use rules:

Map use cases to your data classification scheme.

Create a simple "never use with AI browsers" list (e.g., customer PII, payment card data, PHI, secrets).

Define allowed tasks vs. prohibited tasks with examples.

Integrate into AI policy, security training, and onboarding.
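The "never use" list can also be expressed as a simple check that training material or an internal request form could reuse. This is a sketch only: the label names below are hypothetical stand-ins for whatever your data classification scheme actually uses.

```python
# Hypothetical data labels mirroring the "never use with AI browsers" list.
NEVER_USE = frozenset({"customer_pii", "payment_card_data", "phi", "secrets"})


def is_task_allowed(data_labels):
    """Return (allowed, blocked_labels) for a task's data labels."""
    blocked = NEVER_USE & set(data_labels)
    return (not blocked, sorted(blocked))


if __name__ == "__main__":
    print(is_task_allowed(["public_docs"]))
    print(is_task_allowed(["phi", "public_docs", "secrets"]))
```

Returning the blocked labels alongside the decision lets the user see why a task was refused, which supports the "examples" part of the rules.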

Upgrade monitoring and incident response:

Close visibility and tooling gaps.

Update threat models and IR runbooks (prompt injection, cross-context/tab exfiltration, agent misuse).

Add SOC detection signals for agent patterns (e.g., rapid multi-step web actions, unusual destination spikes, anomalous form submissions).

Add "rogue browser agent" to a future tabletop exercise.
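To illustrate the "rapid multi-step web actions" signal, the sketch below flags users who perform more than a set number of actions within a short sliding window. The event shape (`(user, timestamp)` pairs sorted by time) and the thresholds (5 actions in 10 seconds) are assumptions chosen for the example; tune them against your own baseline before alerting on them.

```python
from collections import deque
from datetime import datetime, timedelta


def find_rapid_action_bursts(events, max_actions=5, window=timedelta(seconds=10)):
    """Flag users with more than max_actions events inside the window.

    events: iterable of (user, datetime) pairs, sorted by time.
    """
    recent = {}  # user -> deque of timestamps still inside the window
    flagged = set()
    for user, ts in events:
        queue = recent.setdefault(user, deque())
        queue.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while ts - queue[0] > window:
            queue.popleft()
        if len(queue) > max_actions:
            flagged.add(user)
    return flagged


if __name__ == "__main__":
    base = datetime(2025, 12, 10, 9, 0, 0)
    burst = [("alice", base + timedelta(seconds=i)) for i in range(6)]
    steady = [("bob", base + timedelta(minutes=i)) for i in range(6)]
    events = sorted(burst + steady, key=lambda event: event[1])
    print(find_rapid_action_bursts(events))
```

A burst alone is not proof of agent activity, so treat it as one input to correlate with destination and form-submission anomalies.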

Your plan will vary based on your risk tolerance and tooling. But having a plan is what prevents this from turning into a quiet, unmanaged adoption.

Final Thoughts

Early-stage product teams prioritize user experience and growth. Security improvements usually come later, after the pain of the problem exceeds the pain of the solution.

This problem is often compounded because product teams use the wrong metric. Traditional risk scoring systems like CVSS can be useful for software vulnerabilities, but they often fail to capture the true business risk of agent misuse and prompt injection attacks.

A practical example: From the product team’s perspective, a prompt injection attack against a single user may only register as a Medium severity risk (CVSS 6.0). But for the enterprise, that same issue may be a critical risk, especially if the impacted user is an executive or a member of the IT or Finance team.

Gartner’s warning gives security leaders temporary air cover to be intentional about adoption. But executives should assume the conversation will shift from “not yet” to “how fast can we safely enable this.” Build the adoption plan now so you aren’t forced into a rushed, suboptimal plan later.
